#Aria2leagel full

Using `--no-clobber` prevents each of the 10 wget processes from downloading the same file twice. Files are matched on their full relative URL path.

#Aria2leagel download

Starting 10 wget processes in a loop, each recursively downloading from the same website, does not make them overlap or download the same file twice.
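Such a loop might look like the sketch below. It assumes a POSIX shell and starts N recursive wget processes against one site in the background, then waits for all of them. Several parts are illustrative assumptions: the function name `parallel_mirror`, the `WGET` override (set it to `echo` for a dry run), and the extra `--no-parent` flag. `--no-clobber` only skips files that already exist on disk, so two processes that reach the same file at the same moment may still both fetch it.

```shell
#!/bin/sh
# Sketch: start several recursive wget processes against the same site.
# --no-clobber makes each process skip files that already exist locally,
# so the processes tend to divide the work instead of repeating it.
# WGET can be overridden (e.g. WGET=echo) for a dry run; this hook is a
# hypothetical convenience of this sketch, not a wget feature.
parallel_mirror() {
  site="$1"            # start URL (placeholder, e.g. https://example.com/)
  jobs="${2:-10}"      # number of wget processes; 10 as in the text
  i=1
  while [ "$i" -le "$jobs" ]; do
    # --no-parent keeps wget from climbing above the start directory;
    # the trailing '&' runs each wget in the background.
    ${WGET:-wget} --recursive --no-clobber --no-parent --no-verbose "$site" &
    i=$((i + 1))
  done
  wait                 # block until every background wget has exited
}
```

Usage: `parallel_mirror https://example.com/ 10`, where example.com stands in for the real site.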

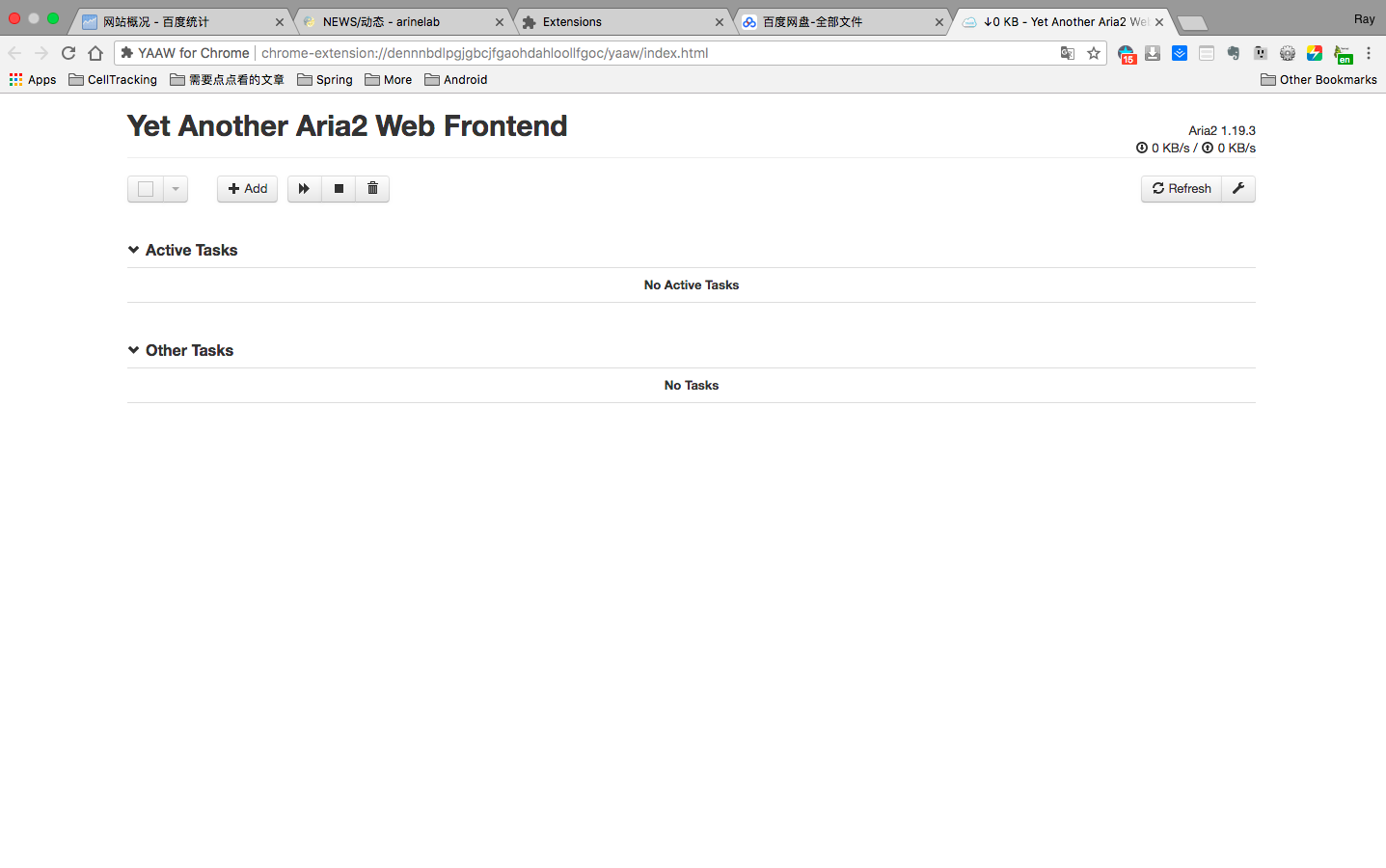

# Multiple Simultaneous Downloads Using Wget Recursively (unknown list of URLs)

If you already have a list of every URL you want to download, skip down to cURL below. If you are doing recursive downloads, where you don't know all of the URLs yet, wget is perfect.

Consider using regular expressions or FTP globbing. That way you can start wget multiple times, each with a different group of filename starting characters. Choose the groups according to how often those characters occur. For example, this is how I sync a folder between two NAS:

`wget --recursive --level 0 --no-host-directories --cut-dirs=2 --no-verbose --timestamping --backups=0 --bind-address=10.0.0.10 --user= --password= "*" --directory-prefix=/volume1/foo &`

`wget --recursive --level 0 --no-host-directories --cut-dirs=2 --no-verbose --timestamping --backups=0 --bind-address=10.0.0.11 --user= --password= "*" --directory-prefix=/volume1/foo &`

The first wget syncs all files/folders starting with 0, 1, 2... F, G, H. The second syncs everything else.

This was the easiest way to sync between a NAS with one 10G Ethernet port (10.0.0.100) and a NAS with two 1G Ethernet ports (10.0.0.10 and 10.0.0.11). I bound the two wget processes to the different Ethernet ports with `--bind-address` and ran them in parallel by putting `&` at the end of each line. That way I was able to copy huge files at 2x 100 MB/s = 200 MB/s in total.

As other posters have mentioned, I'd suggest you have a look at aria2. From the Ubuntu man page for version 1.16.1:

> aria2 is a utility for downloading files. The supported protocols are HTTP(S), FTP, BitTorrent, and Metalink. aria2 can download a file from multiple sources/protocols and tries to utilize your maximum download bandwidth. It supports downloading a file from HTTP(S)/FTP and BitTorrent at the same time, while the data downloaded from HTTP(S)/FTP is uploaded to the BitTorrent swarm. Using Metalink's chunk checksums, aria2 automatically validates chunks of data while downloading a file, like BitTorrent.

You can use the `-x` flag to specify the maximum number of connections per server (default: 1): `aria2c -x 16`

If the same file is available from multiple locations, you can choose to download from all of them. Use the `-j` flag to specify the maximum number of parallel downloads for every static URI (default: 5).

For usage information, the man page is very descriptive and has a section at the bottom with usage examples.

#Aria2leagel portable

Aria2App is your portable server-grade download manager backed by aria2 directly on your device. You can also manage aria2 instances running on external devices through the JSON-RPC interface.

- Add HTTP(S), (S)FTP, BitTorrent, and Metalink downloads
- Add torrents with the integrated search engine
- Start downloads by clicking on links in the browser
- View statistics about the peers and servers of your downloads
- Display information about every file in a download
- Download files from the server to your device through DirectDownload
- Change the options of a single download or aria2's general options
- Receive live notifications for all of your downloads or only selected ones

aria2 is developed by Tatsuhiro Tsujikawa. BitTorrent is a registered trademark of BitTorrent Inc.
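When you already have a list of URLs, the aria2c flags described above can be combined. The sketch below makes several assumptions:

- URLs sit in a plain-text file, one per line, passed with aria2c's `-i` (`--input-file`) option.
- The function name `parallel_fetch`, the `ARIA2C` dry-run override and the choice of `-s 16` are illustrative only.

Note that `-s` (`--split`, default 5) sets how many connections a single file is split across. Raising `-x` alone may therefore not open more than five connections per file.

```shell
#!/bin/sh
# Sketch: fetch every URL listed in a file with aria2c.
#   -i FILE : read URLs from FILE, one per line
#   -j N    : run up to N downloads in parallel (default 5)
#   -x 16   : allow up to 16 connections to one server per download (default 1)
#   -s 16   : split each file across up to 16 connections (default 5)
# ARIA2C can be overridden (e.g. ARIA2C=echo) for a dry run; this hook is a
# hypothetical convenience of this sketch, not an aria2 feature.
parallel_fetch() {
  list="$1"            # path to the URL list (hypothetical file name)
  jobs="${2:-5}"       # parallel downloads; 5 matches aria2c's default
  ${ARIA2C:-aria2c} -x 16 -s 16 -j "$jobs" -i "$list"
}
```

Usage: `parallel_fetch urls.txt 5`, where `urls.txt` is a placeholder for your own list.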
